Earlier this year, Apple announced a string of new initiatives aimed at creating a safer environment for young kids and teens using the company’s devices. Besides making it easier to set up kids’ accounts, the company plans to give parents the option of sharing their children’s age with app developers, so that developers can tailor the content they show.

Apple says these updates will be made available to parents and developers later this year. In this post, we break down the pros and cons of the new measures. We also touch on what Instagram and Facebook (and the rest of Meta) have to do with it, and discuss how the tech giants are trying to pass the buck on young users’ mental health.

Before the updates: how Apple protects kids right now

Before we talk about Apple’s future innovations, let’s quickly review the parental control status quo on Apple devices. The company introduced its first parental controls way back in June 2009 with the release of iPhone OS 3.0, and has been developing them bit by bit ever since.

As things stand, users under 13 must have a special Child Account. These accounts allow parents to access the parental control features built into Apple’s operating systems. Teenagers can continue using a Child Account until the age of 18, as their parents see fit.

What Apple’s Child Account management center currently looks like.

Now for the new stuff…

The company has announced a series of changes to its Child Account system related to how parental status is verified. Additionally, it’ll soon be possible to edit a child’s age if it was entered incorrectly. Previously, for accounts of users under 13, it wasn’t even an option: Apple suggested waiting “for the account to naturally age up”. In borderline cases (accounts of kids just under 13), you could try a workaround involving changing the birth date — but such tricks won’t be needed for much longer.

But perhaps the most significant innovation relates to simplifying the creation of these Child Accounts. Henceforth, if parents don’t set up a device before their under-13-year-old starts using it, the child can do it themselves. In this case, Apple will automatically apply age-appropriate web content filters and only allow pre-installed apps, such as Notes, Pages, and Keynote.

Upon visiting the App Store for the first time to download an app, the child will be prompted to ask a parent to complete the setup. Until a parent gives consent, neither app developers nor Apple itself can collect data on the child.

At this point, even the least tech-savvy parent might ask the logical question: what if my child enters the wrong age during setup? Say, not 10, but 18. Won’t the deepest, darkest corners of the internet be opened up to them?

How Apple intends to solve the age verification issue

The single most substantial of Apple’s new initiatives announced in early 2025 attempts to address the problem of online age verification. The company proposes the following solution: parents will be able to select an age category and authorize sharing this information with app developers during installation or registration.

This way, instead of relying on young users to enter their date of birth honestly, developers will be able to use the new Declared Age Range API. In theory, app creators will also be able to use age information to steer their recommendation algorithms away from inappropriate content.

Through the API, developers will only know a child’s age category — not their exact date of birth. Apple has also stated that parents will be able to revoke permission to share age information at any time.
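To make the idea concrete, here is a minimal Python sketch of how an app might filter content using only an age category rather than a birth date. It is purely illustrative: the names `AgeRange`, `ContentItem`, `allowed`, and `filter_feed` are hypothetical and do not reflect the actual Declared Age Range API or its calls. It also assumes one possible policy: if no age category has been shared (or permission was revoked), the app falls back to all-ages content only.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical model, NOT Apple's API: it only mimics the idea that an app
# learns an age *range* (category), never the exact date of birth.
@dataclass(frozen=True)
class AgeRange:
    lower: int                   # youngest age the category covers, e.g. 13
    upper: Optional[int] = None  # None means "and older"; unused by the filter below


@dataclass(frozen=True)
class ContentItem:
    title: str
    min_age: int  # minimum age the (hypothetical) app deems appropriate


def allowed(item: ContentItem, age_range: Optional[AgeRange]) -> bool:
    """Return True if the item may be shown.

    age_range is None when no age category was shared. This sketch then
    assumes the most cautious policy (all-ages content only); a real app
    is free to choose differently, which is exactly the loophole at issue.
    """
    if age_range is None:
        return item.min_age <= 4
    # Compare against the lower bound: a 13-15 category may mean a 13-year-old.
    return age_range.lower >= item.min_age


def filter_feed(items: list[ContentItem], age_range: Optional[AgeRange]) -> list[str]:
    # Keep only the titles the declared age category permits.
    return [item.title for item in items if allowed(item, age_range)]


feed = [
    ContentItem("Cartoon clip", 4),
    ContentItem("Puzzle game", 9),
    ContentItem("Teen vlog", 13),
    ContentItem("Horror trailer", 18),
]

print(filter_feed(feed, AgeRange(13, 15)))  # ['Cartoon clip', 'Puzzle game', 'Teen vlog']
print(filter_feed(feed, None))              # ['Cartoon clip']
```

Checking against the lower bound of the category is the cautious design choice here: a child in a 13 to 15 bracket has to be treated as possibly 13, since the app never learns more than the bracket itself.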

In practice, access to the age category will become yet another permission that young users will be able to give (or, more likely, not give) to apps — just like permissions to access the camera and microphone, or to track user actions across apps.

This is where the main flaw of the proposed solution lies. At present, Apple has given no guarantee that users who deny access to their age category will be blocked from using a downloaded app. That decision rests with app developers, and since there are no legal consequences for letting children access inappropriate content, they have little incentive to enforce it. Moreover, many companies are actively seeking to grow their young audience, since young kids and teens spend a lot of their time online (more on this below).

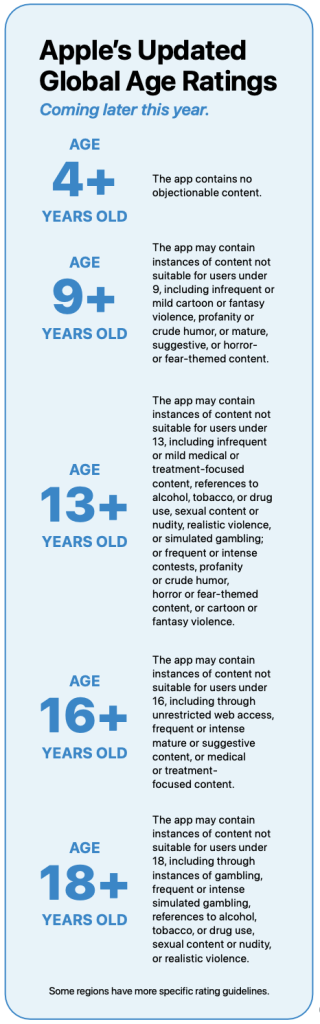

Finally, let’s mention another of Apple’s latest innovations: an update to its age-rating system. It will now consist of five categories: 4+, 9+, 13+, 16+, and 18+. In the company’s own words, “This will allow users a more granular understanding of an app’s appropriateness, and developers a more precise way to rate their apps”.

Apple is updating its age rating system: it will comprise five categories.

Apple and Meta disagree over who’s responsible for children’s safety online

The problem of verifying a young person’s age online has long been a hot topic. The idea of showing ID every time you want to use an app is, naturally, hardly a crowd-pleaser.

At the same time, taking all users at their word is asking for trouble. After all, even an 11-year-old can figure out how to enter a false age in order to register on TikTok, Instagram, or Facebook.

App developers and app stores are all too eager to lay the responsibility for verifying a child’s age at anyone else’s doorstep but their own. Among app developers, Meta is particularly vocal in advocating that age verification is the duty of app stores. And app stores (especially Apple’s) insist that the buck stops with app developers.

Many view Apple’s new initiatives on this matter as a compromise. Meta itself has this to say:

“Parents tell us they want to have the final say over the apps their teens use, and that’s why we support legislation that requires app stores to verify a child’s age and get a parent’s approval before their child downloads an app”.

All very well on paper — but can it be trusted?

Child safety isn’t the priority: why you shouldn’t trust tech giants

Entrusting kids’ online safety to companies that directly profit from the addictive nature of their products doesn’t seem like the best approach. Leaks from Meta, whose statement we quoted above, have repeatedly shown that the company deliberately targets young users.

For example, in her book Careless People, Sarah Wynn-Williams, former global public policy director at Facebook (now Meta), recounts how in 2017 she learned that the company was inviting advertisers to target teens aged 13 to 17 across all its platforms, including Instagram.

At the time, Facebook was selling the chance to show ads to youngsters at their most psychologically vulnerable: when they felt “worthless”, “insecure”, “stressed”, “defeated”, “anxious”, “stupid”, “useless”, and/or “like a failure”. In practice, this meant, for example, that the company would track when teenage girls deleted selfies and then show them ads for beauty products.

Another leak revealed that Facebook was actively hiring new employees to develop products aimed at kids as young as six, with the goal of expanding its consumer base. It’s all a bit reminiscent of tobacco companies’ best practices back in the 1960s.

Apple has never particularly prioritized kids’ online safety, either. For a long time its parental controls were quite limited, and kids themselves were quick to exploit holes in them.

It wasn’t until 2024 that Apple finally closed a vulnerability allowing kids to bypass controls just by entering a specific nonsensical phrase in the Safari address bar. That was all it took to disable Screen Time controls for Safari — giving kids access to any website. The vulnerability was first reported back in 2021, yet it took three years for the company to react.

Content control: what really helps parents

Many child psychology experts agree that unlimited consumption of digital content is bad for children’s psychological and physical health. In his 2024 book The Anxious Generation, US psychologist Jonathan Haidt describes how smartphone and social media use among teenage girls can lead to depression, anxiety, and even self-harm. As for boys, Haidt points to the dangers of overexposure to video games and pornography during their formative years.

Apple may have taken a step in the right direction, but it’ll be for nothing if third-party app developers decide not to play ball. And as the example of Meta illustrates, relying on their honesty and integrity seems premature.

Therefore, despite Apple’s innovations, if you need a helping hand, you’ll find one… at the end of your own arm. If you want to maintain control over what and how much your child consumes online with minimal interference in their life, look no further than our parental control solution.

Kaspersky Safe Kids lets you view reports detailing your child’s activity in apps and online in general. You can use these to fine-tune restrictions and help prevent digital addiction, filter out inappropriate content in search results and, if necessary, block specific sites and apps.
