A recent publication by researchers at the University of California, Irvine, demonstrates a fascinating fact: optical sensors in computer mice have become so sensitive that, in addition to tracking surface movements, they can pick up even minute vibrations — for instance, those generated by a nearby conversation. The theoretical attack, dubbed “Mic-E-Mouse”, could potentially allow adversaries to listen in on discussions in “secure” rooms, provided the attacker can somehow intercept the data transmitted by the mouse. As is often the case with academic papers of this kind, the proposed method comes with quite a few limitations.

Specifics of the Mic-E-Mouse attack

Let’s be clear from the start: not just any old mouse will work for this attack. It specifically requires models with the most sensitive optical sensors. Such a sensor is essentially an extremely simplified video camera that films the surface of the desk at a resolution of 16×16 or 32×32 pixels. The mouse’s internal circuitry compares consecutive frames to determine how far and in which direction the mouse has moved. The finer the displacement the sensor can detect between frames, the higher the mouse’s resolution, expressed in dots per inch (DPI). The higher the DPI, the less the user has to move the mouse to position the cursor on the screen. There’s also a second metric: the polling rate, which is the frequency at which the mouse data is transmitted to the computer. A sensitive sensor is of little use if the mouse reports its data infrequently. For the Mic-E-Mouse attack to even be feasible, the mouse needs both a high resolution (10 000DPI or more) and a high polling rate (4000Hz or more).
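To put those thresholds in perspective, here is a minimal sketch of the unit arithmetic. It assumes that one DPI step corresponds to one reported count (real sensors may interpolate or scale their output), and the function names are made up for illustration; only the 10 000DPI and 4000Hz thresholds come from the paper.

```python
# Unit arithmetic behind the Mic-E-Mouse thresholds.
# Assumption: one DPI step == one reported count; names are illustrative.

MICROMETERS_PER_INCH = 25_400


def count_size_um(dpi: float) -> float:
    """Smallest displacement (in micrometers) that one sensor count represents."""
    return MICROMETERS_PER_INCH / dpi


def polling_interval_ms(polling_hz: float) -> float:
    """Time between two consecutive reports sent to the computer, in ms."""
    return 1000.0 / polling_hz


def meets_attack_thresholds(dpi: float, polling_hz: float) -> bool:
    """True only if a mouse meets BOTH minimum specs cited for the attack."""
    return dpi >= 10_000 and polling_hz >= 4_000


if __name__ == "__main__":
    print(f"{count_size_um(10_000):.2f} um per count")            # 2.54 um per count
    print(f"{polling_interval_ms(4_000):.2f} ms between reports")  # 0.25 ms between reports
    print(meets_attack_thresholds(16_000, 1_000))                  # False: polling too slow
```

At 10 000DPI, a single count corresponds to about 2.5 micrometers of movement, and at 4000Hz the computer receives a new report every quarter of a millisecond.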

Why do these particular specifications matter? Human speech, which the researchers intended to eavesdrop on, occupies a frequency range of approximately 100 to 6000Hz. Speech produces sound waves, which make the surfaces of nearby objects vibrate. Capturing these vibrations requires an extremely precise sensor, and its data must reach the PC in as complete a form as possible, with the update frequency being the most critical factor. According to the Nyquist–Shannon sampling theorem, a signal can be recovered from its samples only if the sampling rate is more than twice the highest frequency it contains. Consequently, a mouse transmitting data at 4000Hz can, in theory, capture audio frequencies up to 2000Hz at most. But what kind of recording can a mouse actually capture? Let’s take a look.
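The sampling limit is easy to check numerically. The sketch below samples a pure tone at the 4000Hz polling rate and reports where its energy ends up, using a deliberately naive DFT rather than a real FFT library. It assumes ideal, noise-free sampling, which a real mouse sensor certainly does not provide. It also illustrates a detail the theorem implies: a tone above the limit isn’t simply dropped, it shows up at a lower “folded” (aliased) frequency.

```python
import cmath
import math


def nyquist_limit(sample_rate_hz: float) -> float:
    """Highest frequency that can be captured without aliasing."""
    return sample_rate_hz / 2


def alias_frequency(tone_hz: float, sample_rate_hz: float) -> float:
    """Frequency at which a pure tone appears after sampling.

    Folds tone_hz into the band [0, sample_rate_hz / 2].
    """
    f = tone_hz % sample_rate_hz
    return min(f, sample_rate_hz - f)


def dominant_frequency(tone_hz: float, sample_rate_hz: float, n: int = 400) -> float:
    """Sample a cosine and return the frequency of its strongest DFT bin.

    Uses a naive O(n^2) DFT over the non-negative bins only.
    """
    samples = [math.cos(2 * math.pi * tone_hz * k / sample_rate_hz) for k in range(n)]
    best_bin, best_mag = 0, -1.0
    for b in range(n // 2 + 1):
        acc = sum(s * cmath.exp(-2j * math.pi * b * k / n) for k, s in enumerate(samples))
        if abs(acc) > best_mag:
            best_bin, best_mag = b, abs(acc)
    return best_bin * sample_rate_hz / n  # bin index -> Hz


if __name__ == "__main__":
    print(nyquist_limit(4000))             # 2000.0
    print(alias_frequency(3000, 4000))     # 1000
    print(dominant_frequency(3000, 4000))  # 1000.0
```

Both `alias_frequency(3000, 4000)` and `dominant_frequency(3000, 4000)` give 1000, so under these idealized assumptions a 3000Hz tone would masquerade as a 1000Hz one.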

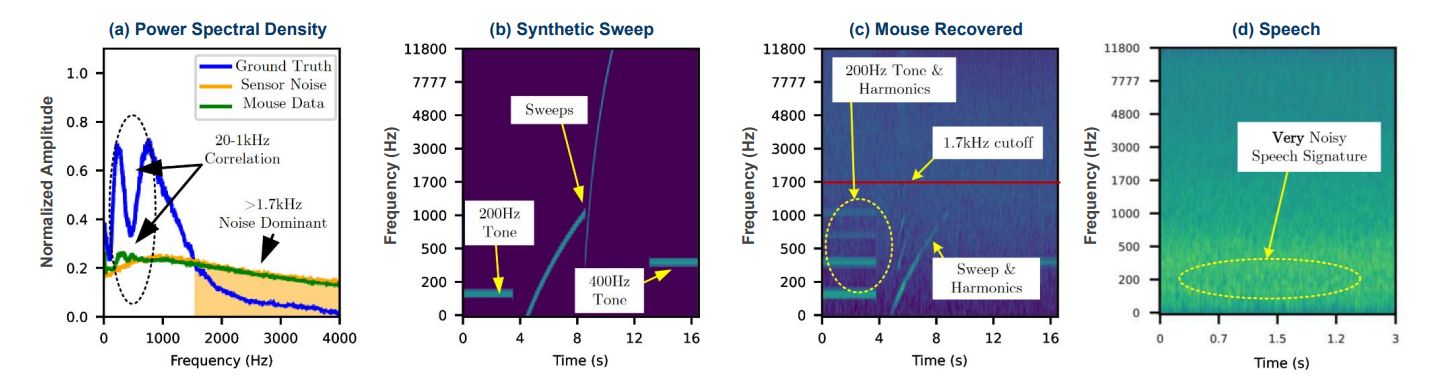

Results of the study on the sensitivity of a computer mouse’s optical sensor for capturing audio information. Source

In graph (a), the blue curve shows the frequency response typical of human speech; this is the source data. The green curve represents what was captured using the computer mouse, and the yellow curve represents the noise level. The green curve corresponds very poorly to the original audio and is almost completely drowned in noise. Graph (d) shows the same thing in a spectral view. It looks as though nothing at all could be recovered from this data. However, let’s look at graphs (b) and (c). The former shows the original test signals: tones at 200 and 400Hz, as well as a sweep whose frequency varies from 20 to 16 000Hz. The latter shows the same signals as captured by the mouse’s sensor. Clearly some information is preserved, although frequencies above 1700Hz can’t be captured.
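How much of that sweep falls above the 1700Hz limit depends on how the sweep is shaped, which the article doesn’t specify. The sketch below assumes either a linear or a logarithmic sweep from 20 to 16 000Hz (both are common test signals) and computes the share of the sweep’s duration spent above a given cutoff.

```python
import math


def sweep_frequency(x: float, f_start: float, f_end: float, sweep: str) -> float:
    """Instantaneous frequency at normalized time x in [0, 1] (assumed sweep shapes)."""
    if sweep == "linear":
        return f_start + (f_end - f_start) * x
    if sweep == "log":
        return f_start * (f_end / f_start) ** x
    raise ValueError(f"unknown sweep type: {sweep}")


def fraction_above(cutoff_hz: float, f_start: float = 20.0,
                   f_end: float = 16_000.0, sweep: str = "linear") -> float:
    """Share of the sweep's duration spent above cutoff_hz (f_start < cutoff < f_end)."""
    if sweep == "linear":
        # Solve f_start + (f_end - f_start) * x = cutoff for x, then take 1 - x.
        return (f_end - cutoff_hz) / (f_end - f_start)
    if sweep == "log":
        # Solve f_start * (f_end / f_start) ** x = cutoff for x, then take 1 - x.
        return math.log(f_end / cutoff_hz) / math.log(f_end / f_start)
    raise ValueError(f"unknown sweep type: {sweep}")


if __name__ == "__main__":
    for kind in ("linear", "log"):
        print(kind, round(fraction_above(1700, sweep=kind), 3))
    # linear 0.895
    # log 0.335
```

Under a linear sweep, roughly nine-tenths of the test signal’s duration would lie above 1700Hz; under a logarithmic sweep, roughly a third.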

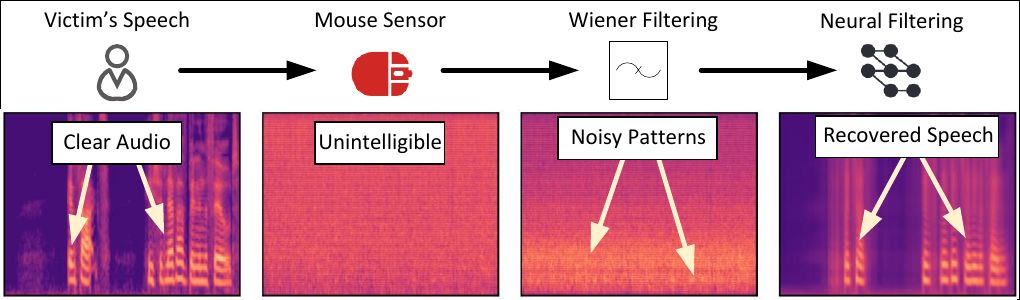

Two different filtering methods were applied to this extremely noisy data. First, the well-known Wiener filtering method, and second, filtering using a machine-learning system trained on clean voice data. Here’s the result.

Spectral analysis of the audio signal at different stages of filtering. Source

From left to right: the source signal, the raw data from the mouse sensor (with maximum noise), and the output of each of the two filtering stages. The final result closely resembles the source material.
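The article names Wiener filtering as the first stage but doesn’t describe the authors’ exact implementation. As a rough illustration only, here is the textbook frequency-domain form: each frequency bin is scaled by the gain S/(S+N), where N is the noise power estimated from noise-only recordings (assumed to be available, for instance from stretches with no speech) and S is estimated as the noisy power minus N. The naive DFT keeps the example dependency-free; a real pipeline would use an FFT on short overlapping frames. The machine-learning stage is not sketched here.

```python
import cmath
import math


def dft(x):
    """Naive O(n^2) discrete Fourier transform (fine for a short demo)."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * math.pi * b * k / n) for k in range(n))
            for b in range(n)]


def idft(spectrum):
    """Inverse of dft(); keeps the real part, since the input signal was real."""
    n = len(spectrum)
    return [sum(spectrum[b] * cmath.exp(2j * math.pi * b * k / n)
                for b in range(n)).real / n
            for k in range(n)]


def wiener_gains(noisy_power, noise_power):
    """Per-bin Wiener gain S / (S + N), with S estimated as max(P_noisy - N, 0)."""
    gains = []
    for p_y, p_n in zip(noisy_power, noise_power):
        s = max(p_y - p_n, 0.0)
        gains.append(s / (s + p_n) if s + p_n > 0 else 0.0)
    return gains


def wiener_denoise(noisy, noise_segments):
    """Denoise one block, using noise-only segments of the same length."""
    spectrum = dft(noisy)
    # Average the power spectra of several noise-only segments for a steadier estimate.
    noise_power = [0.0] * len(noisy)
    for seg in noise_segments:
        for b, v in enumerate(dft(seg)):
            noise_power[b] += abs(v) ** 2 / len(noise_segments)
    gains = wiener_gains([abs(v) ** 2 for v in spectrum], noise_power)
    return idft([g * v for g, v in zip(gains, spectrum)])
```

With a tone buried in Gaussian noise, this should noticeably reduce the error relative to the clean tone. On real mouse data, however, the quality depends heavily on how well the noise spectrum can be estimated.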

So what kind of attack could be built on such a recording? The researchers propose the following scenario: two people are holding a conversation in a secure room that contains a PC. Their speech makes the air vibrate; these vibrations are transmitted to the tabletop, and from the tabletop to the mouse connected to the PC. Malware installed on the PC intercepts the data from the mouse and sends it to the attackers’ server. There, the signal is processed and filtered to reconstruct the speech in full. Sounds rather horrifying, doesn’t it? Fortunately, this scenario has many issues.

Severe limitations

The key advantage of this method is the unusual attack vector. Obtaining data from the mouse requires no special privileges, so security solutions may not even detect the eavesdropping. However, few applications access detailed mouse data, so the attack would require either writing custom software or compromising and modifying specialized software that already uses such data.

Furthermore, there are currently few mouse models with the required specifications (a resolution of 10 000DPI or higher and a polling rate of 4000Hz or more). The researchers found about a dozen potential candidates and tested the attack on two models. These weren’t the most expensive devices (the Razer Viper 8KHz, for instance, costs around $50), but they are gaming mice, which are unlikely to be found connected to a typical workstation. Thus, Mic-E-Mouse is a threat for the future rather than the present: the researchers assume that, over time, high-resolution sensors will become standard even in the most common office models.

The accuracy of the method is low as well: at best, the researchers managed to recognize only 50 to 60 percent of the source material. Finally, keep in mind that, for the sake of the experiment, the researchers simplified their task as much as possible. Instead of capturing a real conversation, they played back human speech through computer speakers. A cardboard box with an opening was placed on top of the speakers, and the opening was covered with a membrane with the mouse resting on it. This means the sound source was not only artificial, but also located mere inches from the optical sensor! When the authors covered the opening with a thin sheet of paper or cardboard, recognition accuracy immediately plummeted to an unacceptable 10–30%. Reliable transmission of vibrations through a thick tabletop wasn’t even considered.

Cautious optimism and security model

Credit where it’s due: the researchers found yet another attack vector that exploits unexpected hardware properties, something no one had previously thought of. For a first attempt, the result is remarkable, and there is undoubtedly potential for further research. After all, the U.S. researchers used machine learning only for signal filtering; the reconstructed audio was then evaluated by human listeners. What if neural networks were also used for speech recognition?

Of course, such studies have an extremely narrow practical application. For organizations whose security model must account for even such paranoid scenarios, the authors of the study propose a series of protective measures. For one, you can simply ban the use of mice with high-resolution sensors, both through organizational policy and technically, by blocklisting specific models. You can also provide employees with mousepads that dampen vibrations. The more relevant conclusion, however, concerns protection against malware: attackers can sometimes abuse completely atypical software features to cause harm, in this case for espionage. So it’s worth identifying and analyzing even such complex cases; otherwise, it may later be impossible to even determine how a data leak occurred.
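A model blocklist of the kind described above could, for instance, be derived from a hardware inventory using the thresholds from the paper. The sketch below assumes a hypothetical inventory in which each model’s maximum DPI and polling rate are known. The model names are placeholders, and actually enforcing the list (for example, through device-control rules in endpoint management software) is outside its scope.

```python
from dataclasses import dataclass

# Thresholds cited for the Mic-E-Mouse attack to be feasible.
MIN_RISKY_DPI = 10_000
MIN_RISKY_POLLING_HZ = 4_000


@dataclass(frozen=True)
class MouseModel:
    """One entry of a hypothetical hardware inventory."""
    name: str
    max_dpi: int
    max_polling_hz: int


def is_risky(model: MouseModel) -> bool:
    """A model is flagged only if it meets BOTH thresholds."""
    return (model.max_dpi >= MIN_RISKY_DPI
            and model.max_polling_hz >= MIN_RISKY_POLLING_HZ)


def build_blocklist(inventory: list[MouseModel]) -> list[str]:
    """Sorted names of the models that should be blocked."""
    return sorted(m.name for m in inventory if is_risky(m))


if __name__ == "__main__":
    inventory = [
        MouseModel("placeholder-office-mouse", 1_600, 125),
        MouseModel("placeholder-gaming-mouse", 20_000, 8_000),
        MouseModel("placeholder-fast-polling-mouse", 3_200, 8_000),
    ]
    print(build_blocklist(inventory))  # ['placeholder-gaming-mouse']
```

Requiring both conditions mirrors the article’s point that the attack needs a high resolution and a high polling rate at the same time.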

side-channel attacks