In November 2022, the U.S. National Security Agency (NSA) issued a bulletin on memory safety in software. If you look at the agency’s other security bulletins, you’ll notice that they mostly focus on either data encryption or organizational issues such as protecting the production cycle. Addressing software makers directly is quite an unusual move for the agency, so the matter is clearly one it considers particularly important. In short, the NSA is urging software developers to switch to programming languages designed to make working with memory safer, which in practice means moving away from C and C++. Where that isn’t possible, it recommends a set of measures to test software for vulnerabilities and prevent their exploitation.

For programmers, these are fairly obvious things. The NSA’s call is aimed less at individual programmers than at management and business representatives, and it’s written in language that businesses can understand. Let’s analyze its arguments without getting too technical.

Memory safety

Let’s open our latest Report on threat evolution for Q3 2022 and take a look at the vulnerabilities most commonly used in cyberattacks. At the top of the list is still CVE-2018-0802, a vulnerability in the Equation Editor component of the Microsoft Office suite that was discovered back in 2018. It’s caused by incorrect handling of data in memory, as a result of which opening a malicious Microsoft Word document can lead to the execution of arbitrary code. Another vulnerability popular with criminals is CVE-2022-2294 in the WebRTC component of the Google Chrome browser, where a buffer overflow leads to arbitrary code execution. A third one, CVE-2022-2624 in Chrome’s PDF viewer, may also lead to a buffer overflow.

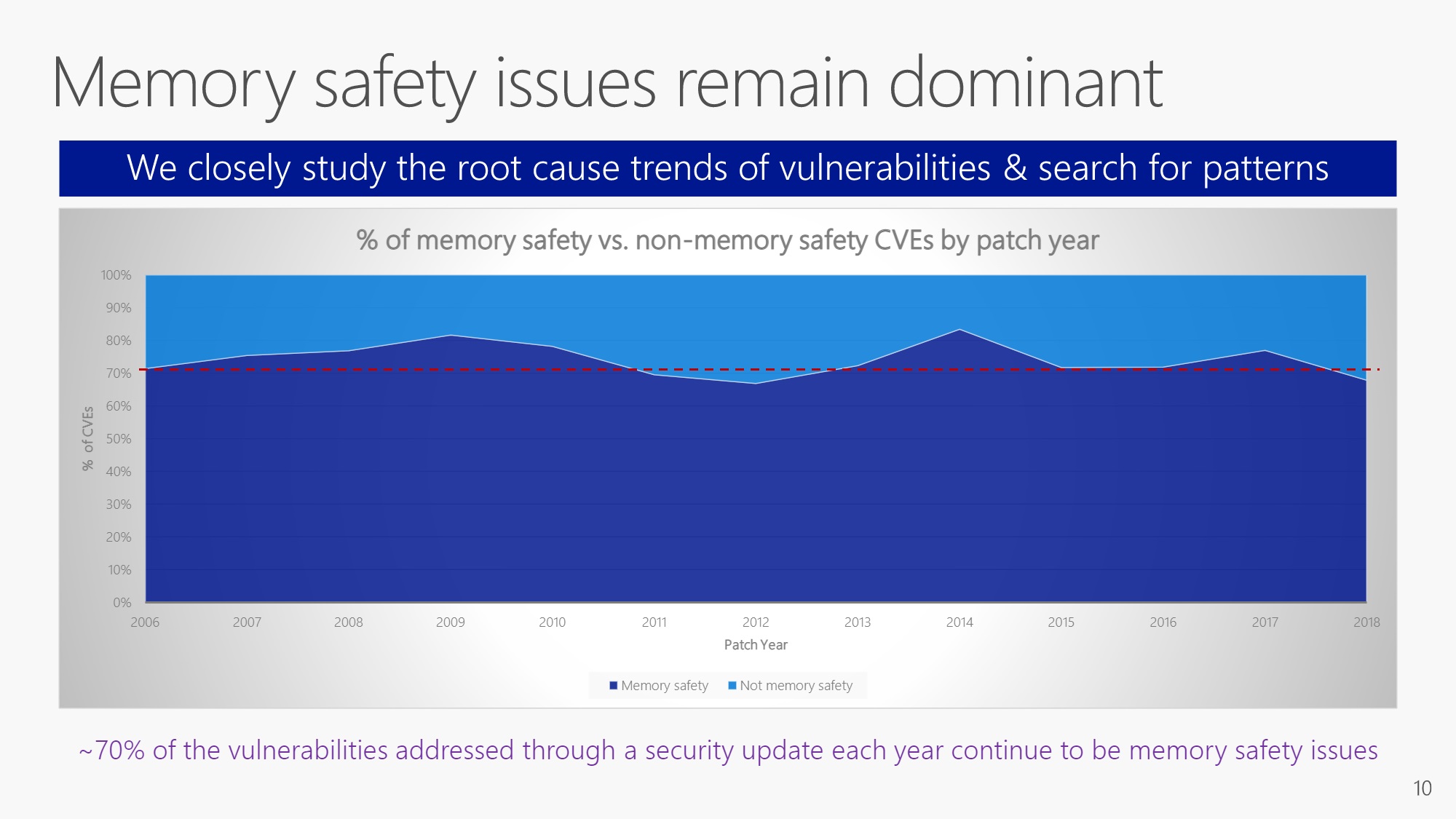

Of course, not all software vulnerabilities are caused by insecure memory handling, but many of them are. The NSA bulletin cites Microsoft’s statistics showing that memory-handling errors account for about 70% of discovered vulnerabilities.

According to Microsoft statistics, about 70% of vulnerabilities are caused by memory bugs.

Why does this happen? If memory errors are such a serious issue, why can’t we just pull ourselves together and stop writing vulnerable code? The root of the problem is the use of the C and C++ programming languages. Their design gives developers a lot of freedom in working with memory, but with freedom comes responsibility: C/C++ programmers have to implement safe reading and writing of data themselves. By contrast, higher-level languages such as C#, Rust, Go, and others take care of that for them. When the source code is compiled, the mechanisms for safe memory handling are introduced automatically, so developers don’t need to spend time on them. Rust goes even further, refusing to compile potentially dangerous code and showing the programmer an error instead.
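To make the difference concrete, here is a minimal Rust sketch. The function names and the eight-byte buffer are illustrative assumptions, not something taken from the NSA bulletin. In C, reading or writing past the end of a buffer is undefined behavior and a classic source of exploitable bugs; in the sketch below, the same mistakes produce an ordinary `None` or `Err` value instead.

```rust
/// Reads one byte from `buf` at `index`, returning `None` instead of
/// touching memory outside the buffer.
fn read_byte(buf: &[u8], index: usize) -> Option<u8> {
    // `get` performs a bounds check before the access.
    buf.get(index).copied()
}

/// Copies `src` into a fixed-size destination (hypothetical 8-byte buffer),
/// refusing any input that would not fit.
fn copy_into(dst: &mut [u8; 8], src: &[u8]) -> Result<usize, String> {
    if src.len() > dst.len() {
        return Err(format!(
            "input of {} bytes does not fit in {} bytes",
            src.len(),
            dst.len()
        ));
    }
    dst[..src.len()].copy_from_slice(src);
    Ok(src.len())
}

fn main() {
    let buf = [10u8, 20, 30];
    println!("{:?}", read_byte(&buf, 1)); // index inside the buffer
    println!("{:?}", read_byte(&buf, 7)); // index past the end

    let mut dst = [0u8; 8];
    println!("{:?}", copy_into(&mut dst, b"hello"));
    println!("{:?}", copy_into(&mut dst, b"far too long"));

    // The compiler also rejects references that outlive their data.
    // Uncommenting the lines below should fail to build with a
    // "does not live long enough" error:
    // let dangling;
    // { let s = String::from("temp"); dangling = &s; }
    // println!("{}", dangling);
}
```

Even a plain `buf[7]` would not read stray memory here: the program would stop with a panic rather than continue with corrupted data, which is a crash instead of a potential code-execution vulnerability.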

Of course, simply giving up C/C++ is not feasible while these languages remain indispensable for certain tasks, such as writing code for microcontrollers (MCUs) or other devices with serious limits on computing power and memory size. Other things being equal, programs written in higher-level languages may be more resource-intensive. But threat statistics show that attacks most frequently target everyday user software (like browsers and text editors), which runs on computers that are very powerful compared to MCUs.

You can’t just change the programming language

The NSA is well aware of this. A huge body of software written in “unsafe” programming languages cannot be ported to another language overnight. Even when a product is being written from scratch, there may well be an established team, infrastructure, and development methods built around a particular programming language.

If you want an analogy, imagine being asked to move out of your house just because it was built a long time ago. You know that the structure is perfectly sound and that it would only collapse given a major earthquake. Besides, you’re used to living there. The Google Chrome development team has a post that explicitly states that they can’t switch right now to another programming language (in this case, Rust) in which security is built into the design. It could be possible in the future, but right now they need other solutions.

The same Google Chrome developers’ post also explains why you can’t fundamentally change the security of C/C++ code: these languages simply weren’t designed to catch all memory problems at compile time in one fell swoop. That’s why the NSA bulletin mentions two sets of measures as an alternative:

- Testing code for potential vulnerabilities with dynamic and static analysis techniques;

- Using features that prevent exploitation of a code error even if it’s already there.
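As a rough illustration of the first measure, dynamic testing can be as simple as feeding a routine large numbers of unexpected inputs and checking that it never misbehaves. The sketch below is a toy fuzzing loop; the record format, the `parse_record` and `fuzz` functions, and the small random number generator are all hypothetical and exist only for this example. Real fuzzing tools are far more sophisticated.

```rust
/// Hypothetical length-prefixed record: the first byte states how many
/// payload bytes follow. Returns `None` for empty or truncated input.
fn parse_record(input: &[u8]) -> Option<&[u8]> {
    let (&len, rest) = input.split_first()?;
    // `get` returns None if the declared length exceeds the available bytes.
    rest.get(..len as usize)
}

/// Small deterministic pseudo-random generator (xorshift64), so that every
/// run with the same seed tests the same inputs.
struct XorShift(u64);

impl XorShift {
    fn next_u64(&mut self) -> u64 {
        self.0 ^= self.0 << 13;
        self.0 ^= self.0 >> 7;
        self.0 ^= self.0 << 17;
        self.0
    }
}

/// Feeds `rounds` random inputs to the parser and counts how many it accepts.
/// A broken invariant or out-of-bounds access would surface as a panic.
fn fuzz(rounds: usize, seed: u64) -> usize {
    let mut rng = XorShift(seed);
    let mut accepted = 0;
    for _ in 0..rounds {
        let len = (rng.next_u64() % 16) as usize;
        let input: Vec<u8> = (0..len).map(|_| rng.next_u64() as u8).collect();
        if let Some(payload) = parse_record(&input) {
            // Invariant: the payload is exactly as long as the prefix claims.
            assert_eq!(payload.len(), input[0] as usize);
            accepted += 1;
        }
    }
    accepted
}

fn main() {
    let rounds = 10_000;
    let accepted = fuzz(rounds, 0x2545_F491_4F6C_DD1D);
    println!("parser accepted {} of {} random inputs", accepted, rounds);
}
```

The same idea applies to C/C++ code, where such random inputs are typically combined with instrumentation that detects invalid memory accesses at run time.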

Changeover challenges

Technical experts agree on the whole with the NSA’s opinion, though they differ on how exactly to switch to higher-level programming languages when security requirements, among other things, call for it. Firstly, it’s important to understand that if such a move occurs, it’ll take many years. Secondly, evolution like this has a price, and not every business is prepared to pay it. Unsafe memory handling in programming languages with a low level of abstraction is a systemic problem. Calling for a radical solution is necessary, but don’t expect everyone to switch tomorrow to developing in C#, Go, Java, Ruby, Rust or Swift, just as you could hardly make a whole city or country switch to vegetarianism, or make some other extreme change, overnight.

Finally, insecure memory handling may be an enormous problem, but it’s far from the only issue in software security. In the several decades of the IT industry’s existence, no one has managed to create a universal, completely safe system for all tasks (highly specialized solutions aside). From a business point of view, it makes sense to invest both in new technologies (by developing the corresponding skills and hiring experienced specialists) and in maximum protection of existing ones. For software developers, that may mean new programming languages alongside technologies for testing existing code. For any other business, it means investing in new technologies to protect against cyberattacks while constantly testing the strength of existing infrastructure. In other words, a comprehensive approach to security is optimal, and will remain so for a long time to come.
